Published: 7/22/2020 8:25:23 AM

The University of Florida and its alumnus Chris Malachowsky, a co-founder of Nvidia, will invest $45 million.

In addition, the university will use NVIDIA chips to upgrade its existing supercomputer, HiPerGator; the upgraded system is expected to enter service in early 2021.

Nvidia has long been regarded as a supplier of PC graphics chips that make video games look more realistic. Today, however, researchers also use Nvidia chips in data centers to speed up training computers to recognize images and to accelerate other artificial intelligence computations.

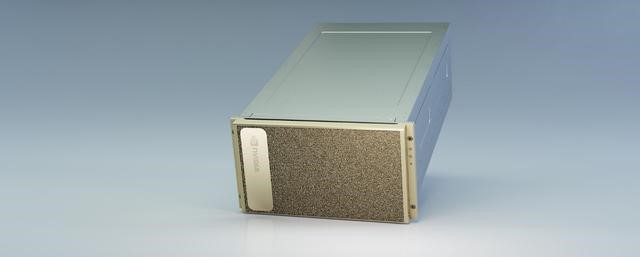

On the AI front, NVIDIA launched the NVIDIA DGX™ A100 at the GTC 2020 conference. Billed as the third generation of the world’s most advanced AI system, it delivers up to 5 petaflops of AI performance and, for the first time, consolidates the performance and functions of an entire data center into a single flexible platform.

DGX A100 systems began shipping worldwide immediately. The first orders will go to the U.S. Department of Energy's (DOE) Argonne National Laboratory, which will use the cluster's AI and computing power to better research and respond to COVID-19.

Jensen Huang, founder and CEO of NVIDIA, said: "NVIDIA DGX A100 is a high-performance system designed for advanced AI. NVIDIA DGX is the first AI system built for the end-to-end machine learning workflow, from data analysis to training to inference. With the huge performance leap of the new DGX, machine learning engineers can stay ahead of the exponential growth of AI models and data."

The DGX A100 system integrates eight new NVIDIA A100 Tensor Core GPUs, which together provide 320 GB of memory for training on the largest AI datasets, along with the latest high-speed NVIDIA Mellanox® HDR 200 Gbps interconnects.

Using the A100's Multi-Instance GPU (MIG) feature, each DGX A100 system can be partitioned into as many as 56 instances to accelerate multiple smaller workloads. With these capabilities, enterprises can allocate computing power and resources according to their own needs on a fully integrated, software-defined platform, and speed up a range of workloads such as data analysis, training, and inference.
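
As a quick sanity check on the figures above, the per-GPU numbers can be derived by dividing the system totals across the eight GPUs. The short Python sketch below is illustrative arithmetic only: it assumes the memory and the instances are split evenly across GPUs, and the helper name `per_gpu_breakdown` is hypothetical, not part of any NVIDIA tool or API.

```python
# Figures quoted in the article for a single DGX A100 system.
GPUS_PER_SYSTEM = 8
TOTAL_GPU_MEMORY_GB = 320
MAX_INSTANCES_PER_SYSTEM = 56


def per_gpu_breakdown(gpus: int, total_memory_gb: int, max_instances: int) -> dict:
    """Divide system-level totals evenly across GPUs (assumed even split)."""
    if total_memory_gb % gpus or max_instances % gpus:
        raise ValueError("totals do not divide evenly across the GPUs")
    return {
        "memory_per_gpu_gb": total_memory_gb // gpus,
        "instances_per_gpu": max_instances // gpus,
    }


breakdown = per_gpu_breakdown(
    GPUS_PER_SYSTEM, TOTAL_GPU_MEMORY_GB, MAX_INSTANCES_PER_SYSTEM
)
print(breakdown)  # {'memory_per_gpu_gb': 40, 'instances_per_gpu': 7}
```

The result, 40 GB and seven instances per GPU, is consistent with the A100's 40 GB of memory and its limit of seven MIG instances per GPU.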